Accelerate your use

of data assets on

Microsoft Azure

Recognising the importance of maximising the use of data assets is on the up, however knowing where to start is causing concern for some organisations.

Here are my insights into Azure’s data capabilities, my key learnings from building data platforms on Microsoft’s Cloud offering, and why we’ve developed an Azure data accelerator.

The benefits of using Azure for data

Regardless of how Microsoft centric your organisation is, it’s worthwhile exploring what Azure has to offer for your data and AI needs. Here’s my round up of its extensive capabilities from a commercial, technical and security perspective.

Commercial

- Licensing fits well within existing MS licensing (for MS customers).

- Identity and Access management re-uses existing Azure AD information.

- Strong support models and SLAs available.

Technical

- Strong serverless offerings (Storage Accounts, Data Factory, Serverless Spark & SQL).

- Built on mature and familiar MS tools. For example, Data Factory is an Azure cloud rebrand of SQL Server Integration Services (SSIS). Azure Functions are built on top of Azure App Services. This circumvents many of the typical problems of Serverless tech (that they can lack useful features).

- Already has strong integrations with current-generation data tooling (Spark, Hadoop integration with Data lake via Synapse).

- Microsoft is a market leader in next-gen data tooling (ML & AI) with strong integration emerging as part of Fabric.

Security

- Enterprise grade security features built-in and often pre-configured.

- Azure Advisor is continuously monitoring and providing security recommendations.

- Azure AD authentication and role-based access/permissions.

Azure data services available to you

There are a plethora of data services within Azure, and once you move beyond the marketing hype there are a few key helpful services to be aware of:

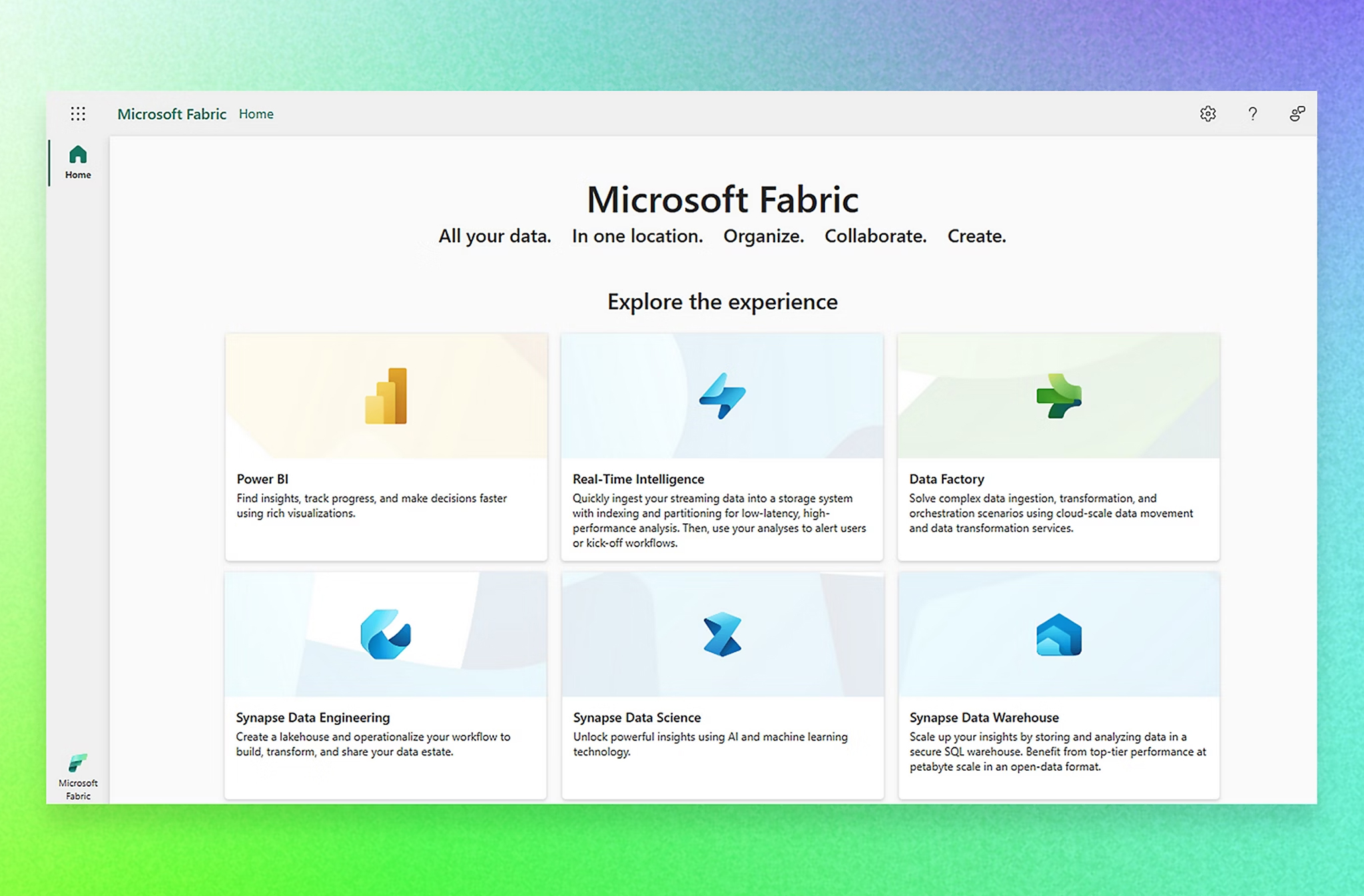

Azure Fabric

Microsoft’s new ‘wrapper’ around the data oriented parts of the Power platform, and the data tooling in Azure (which had some links as part of Synapse, but were not optimal). The relationship here is, for example that Synapse heavily relied on Azure Data Lake Gen 2 as its main storage technology powering analysis (itself built on top of Azure Blob Storage), whereas Fabric uses OneLake, a layer on top of ADLS Gen2 again but with heavier use of the Delta format instead of csv, json, parquet in Synapse. Fabric aims to be much more SaaS-y, and much more of a comprehensive one-stop-shop than Synapse.

Azure Synapse

Essentially a combination of a few Azure tools into a more cohesive PaaS platform: Data Lake Gen 2, Serverless SQL Pools, Azure Data Factory and Spark Notebooks & Jobs (although these are nowhere near as seamless to interact with as a platform like Databricks).

Azure Data Factory

SSIS for the cloud – Serverless data ingestion and transformation pipelines. Offering an optional GUI based development approach (Or code via ARM, terraform and others).

Power BI

Microsoft’s visualisation report designer software and platform, which is now part of the Power Platform. It includes options for standalone or embedded reports.

Microsoft Purview

Purview is a data governance solution that enables organisations to govern their data assets from across their IT estate. It supports data mapping, cataloguing, discovery, classification and end-to-end lineage to ensure data is accessible, trustworthy and secure.

Dataverse

What used to be known as Microsoft’s common data model, dataverse is part of the Power Platform and is a data store that lets you manage data that’s used by business applications. Users can use power apps or PowerBI to build applications using data from dataverse.

Considerations when working with Azure

Serverless / SaaS is not always easier

It is easy to think “Why would I ever step outside the fully managed world” or “the days of coding are dead when we have such great low / no code tools available”.

The reality is different

No / Low code platforms like PowerApps or Data Factory and serverless tools like Azure Functions have their place, but also their own quirks and limitations.

Even in the most user-friendly, non-developer-oriented platform ever, at some point it won’t work as expected. The big difference between code and SaaS is the tooling available to figure out why. I’m using a broad definition of SaaS here, to include anything where you can’t examine its ways of working all the way down to the source code. Even Azure Functions are still closer to a SaaS platform than they are to an open source framework running on an open source web server.

For example, Azure Data Factory offers a drag and drop way to build ingestion pipelines. But all it takes is a field mapping to be incorrect, or a quirky pagination method of an API, and you can spend days trying to figure out why – with a SaaS based interface offering you little help or ways to see under the hood, limited logging, and terse, sometimes misleading error messages, with no stack traces, limited logging, and terse, sometimes misleading error messages.

The big difference between code and SaaS is the tooling available to figure out why.

If petrol cars are replaced with electric cars, it is misleading to think that no one needs to understand internal combustion engines any more, without also recognising that a new form of expertise is needed.

You cannot run a data factory pipeline locally and you cannot attach a debugger to a logic app. In the same way as a traditional software engineer ends up with a toolbelt of debugging approaches, the same is true of new SaaS-style platforms.

A real world example would be something like: running Azure Data Factory through a proxy server to capture the exact network requests it is making to debug a tricky API scraper, sort of an Azure “Wireshark” if you will. Any marketing that promises non-experts can handle their own data ingestion, analytics etc. should be taken with a pinch of salt. It really depends on how much your business requirement is a perfect match to the expected design of the SaaS system. Technology landscapes are broad and varied, and there are only so many shapes a low code system can anticipate.

This is why honest analysis is needed, without an emotional preference for one or the other, and a recognition of the limitations of both approaches. The reality is that for many use cases, writing code is unnecessary now, and incurs a significant development and maintenance cost that holds back businesses from full modernisation. At the same time, consider this as an analogy. If petrol cars are replaced with electric cars, it is misleading to think that no one needs to understand internal combustion engines any more, without also recognising that a new form of expertise is needed.

Example use cases

Azure has the capability to support simple data use cases for dashboard reporting with small scale structured data with a few data sources – through to more complex use cases involving big data with multiple sources and AI/ML requirements.

For instance, on the simpler end of the spectrum, data can be stored in Dataverse for customers that do not need the complexity and flexibility of the Azure platform (Or have MS Dynamics based software estates).

Synapse is a great solution for mid to high-end complexity, but if Spark is going to be a major feature of the platform then Databricks on Azure might be a better fit. This is due to the following reasons:

- Spark is slow to start and interact within Synapse, but it’s very fast in Databricks

- Spark is more of a bolted-on feature to Synapse, but it is the core of Databricks

However, Databricks is a non-MS product that has been Azure-ified, whereas Synapse has much better integration with the wider Azure estate e.g. you have the ability to trigger batch jobs, use functions from ADF pipelines, and use Azure identities for data management approaches with Purview.

It’s worth noting that MS Fabric looks like it will offer similar capabilities to Databricks, so will be worth considering as an option once it’s out of preview and is proven to be reliable for production workloads.

We’ve created our own decision tool to help clients to decide what Azure data platform configurations are most suitable based on different requirements and use cases, which we’ll be looking to share in a subsequent blog post.

Our Azure Data Accelerator

Working with Azure technologies to maximise the value of your data assets, carries a number of benefits, particularly if your organisation is already Microsoft centric due to the level of integrations, security features, and value in the subscription model.

However, there is a lot of complexity in the service offering to understand, and quirks in working with services like Azure Data Factory (ADF), which do have limitations and often require workarounds, and this can create delivery risks and additional effort in build work if you don’t know what to look out for or have potential solutions you can call upon.

It is very easy to get up-and-running with low code tools, like ADF, but setting yourself up for sustainable success – by using IaC, version control, reusable pipeline components, CI/CD etc. – takes effort and knowledge, but pays dividends over the long term.

It is for these reasons that Pivotl offers our clients an Azure data accelerator, which is designed to get you up-and-running with a solid foundation on the Azure ecosystem, with up-skilling and knowledge transfer built-in from the outset, enabling you to build out and scale your data platform with or without our support.

Drop me a note to find out more.

It is very easy to get up-and-running with low code tools, but setting yourself up for sustainable success – takes effort and knowledge, but pays dividends over the long term.

Invest in the Microsoft Azure Data Accelerator

- Quickly get up and running with a solid foundation on the Azure ecosystem

- Up-skilling and knowledge transfer built-in

- Build out and scale your data platform with or without our support.

Email stuart.arthur@bepivotl.com to find out more.