Completing the foundations for data-first transformation

My concluding blog in our Data-First Methodology series focuses on the final three of the four foundational blocks – essential for any organisation with ambitions of becoming increasingly data-driven over time:

- Data Management – why how well we manage data (or fail to) dictates whether an organisation's data quality is good or bad.

- Centre of Excellence – why it takes more than a restructure to create a BI or Data team.

- Data Platforms – why we need them, but why we should never, ever think technology alone is the answer.

DATA MANAGEMENT

“Our data is cr*p” is the phrase I hear time after time when employees describe the state of their data. In reality, however, complex organisations rarely have entirely cr*p data. In most areas that matter to their business they are sitting on a potential goldmine of versatile and valuable data, but the quality is patchy. One pattern is consistent, though: data quality is good where data is managed and, yes, cr*p where it isn’t.

Data Management in principle is simple. An identified data set must meet a defined standard. It’s monitored to check it meets that standard and, if not, it’s fixed. How do we know what standard needs to be achieved? Our Data-First methodology starts by deliberately identifying the tangible outputs and benefits of the data, and the standards are set against these. You can read all about Being deliberate with data in my second blog of The Series.
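To make the standard, monitor and fix loop a little more concrete, here is a minimal Python sketch. It assumes the standard is simply a completeness threshold per field; the field names, thresholds and helper names (`Standard`, `completeness`, `check_against_standard`) are hypothetical and chosen only for illustration. In practice the standard would be set against the outputs and benefits the data must support.

```python
from dataclasses import dataclass


@dataclass
class Standard:
    """A hypothetical quality standard for one field of a data set."""
    field: str
    min_completeness: float  # share of records (0 to 1) that must have a value


def completeness(records: list[dict], field: str) -> float:
    """Share of records where the field is present and not None."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if r.get(field) is not None)
    return filled / len(records)


def check_against_standard(records: list[dict], standards: list[Standard]) -> list[str]:
    """Monitor step: return the fields that miss their standard and need fixing."""
    return [
        s.field
        for s in standards
        if completeness(records, s.field) < s.min_completeness
    ]


if __name__ == "__main__":
    customers = [
        {"id": 1, "postcode": "AB1 2CD", "email": "a@example.com"},
        {"id": 2, "postcode": None, "email": "b@example.com"},
        {"id": 3, "postcode": "EF3 4GH", "email": None},
        {"id": 4, "postcode": "IJ5 6KL", "email": "d@example.com"},
    ]
    standards = [Standard("postcode", 0.98), Standard("email", 0.5)]
    print(check_against_standard(customers, standards))  # ['postcode']
```

Both fields are 75% complete here, but only the postcode misses its (stricter) standard, so it is the only field flagged for fixing. The point is that "good enough" is defined by the standard, not by the data.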

The principle is simple, but the reality of embedding it across an entire organisation is much more challenging. Most traditional complex organisations are structured in siloed departmental verticals, and data is managed for the sake of each individual department or vertical. Enterprise Data Management is all about flipping that approach to meet the horizontal, cross-cutting demands of improving user experience, insight, flow and empowerment across the organisation.

Enterprise Data Management requires deliberate identification of the outputs, improvements and benefits that data can deliver. With this understanding of the data needed and the quality required, the data team can design an effective approach for bringing the data together and proactively identify gaps in data quality.

Data quality problems are much bigger than data entry errors: missing data, low-frequency data and slow data all affect the quality of data-based outputs.
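Each of the three problems named above can be checked mechanically. The sketch below is a minimal illustration, assuming each reading carries hypothetical `value`, `observed_at` and `received_at` fields and that the acceptable interval between readings and the acceptable arrival delay are agreed thresholds; it is not a complete data quality framework.

```python
from datetime import datetime, timedelta


def quality_issues(
    readings: list[dict],
    expected_interval: timedelta,
    max_latency: timedelta,
) -> list[tuple[str, datetime]]:
    """Flag missing, low-frequency and slow data in a series of readings.

    Each reading is a dict with hypothetical keys:
      'value'       - the measurement (None if missing)
      'observed_at' - when the event happened
      'received_at' - when the data arrived in our systems
    """
    issues = []
    ordered = sorted(readings, key=lambda r: r["observed_at"])

    # Low-frequency data: the gap between consecutive readings is too long.
    for prev, curr in zip(ordered, ordered[1:]):
        if curr["observed_at"] - prev["observed_at"] > expected_interval:
            issues.append(("low_frequency", curr["observed_at"]))

    for r in ordered:
        # Missing data: the record exists but carries no value.
        if r["value"] is None:
            issues.append(("missing", r["observed_at"]))
        # Slow data: it arrived too long after the event it describes.
        if r["received_at"] - r["observed_at"] > max_latency:
            issues.append(("slow", r["observed_at"]))

    return issues


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0)
    readings = [
        {"value": 10, "observed_at": t0, "received_at": t0 + timedelta(minutes=5)},
        {"value": None, "observed_at": t0 + timedelta(hours=1),
         "received_at": t0 + timedelta(hours=1, minutes=5)},
        {"value": 12, "observed_at": t0 + timedelta(hours=4),
         "received_at": t0 + timedelta(hours=6)},
    ]
    found = quality_issues(readings, timedelta(hours=1), timedelta(minutes=30))
    print([kind for kind, _ in found])  # ['low_frequency', 'missing', 'slow']
```

Note that the last reading has a perfectly valid value yet still fails on both frequency and timeliness, which is why checking for entry errors alone understates the problem.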

At Pivotl we help organisations develop their first enterprise data management teams, which involves a combination of people, process, policy, culture and technology change. The teams are really small, but they make a huge impact. They provide a central eye on data quality, aligned to developing a true enterprise data asset as outlined in the first blog in this series. They don’t work alone; they are part of a much bigger system of accountability, capability and capacity across the organisation, working together to drive up data quality where it matters in a way that enables a single view of truth.

CENTRE OF EXCELLENCE

Highly skilled data professionals are in huge demand globally. If we want to attract, retain and optimise their capabilities in our organisations we need to create an environment where they can thrive. Developing a Centre of Excellence for enterprise data delivery is therefore a must.

It’s about creating an environment that professionalises data functions and roles in a system of robust policy, process and protocols that determine how the organisation works with data. It’s an environment that is deliberate in design and implemented with energy and agency driven from the very top of the organisation. What it isn’t is a simple realignment of BI staff and data engineers into a new team structure with a smattering of new technology and a kind word of encouragement.

In any complex organisation with Data-First ambitions, a Chief Data Officer is essential: someone accountable for, and capable of, setting the vision, ambition and roadmap of improvement aligned to business outcomes. This person also takes the reins of accountability for developing and driving a culture in which the very foundations of data security and compliance, and of data management, are assured across the organisation. When established as a standalone role, such a position is a clear and strong signal of the executive C-suite’s commitment to the organisation’s enterprise data transformation.

Data architects, engineers, scientists and analysts need to be supported by a system that allows them to easily access data they can work with to solve business challenges and realise organisational opportunities. They need to be supported by an assured system of clearly aligned ways of working and complementary processes designed around a culture of common purpose.

This is an evolution over time, and one that requires a continuous reality check. Many data roles do not fit well within the culture and archetype of the organisation. Data engineering and data architecture are complex specialist roles that require a mindset and environment that aren’t common in many organisations today. However, these functions can be bought in from outside as and when required; even enterprise-level data management, information governance and data analyst roles can be acquired as a service.

The reality is that the one role that cannot be effectively bought in from outside on a sustainable basis is the effective user of great data products: the informed expert decision-maker or the AI-empowered employee. Yet time and again this role of excellent user is overlooked as the organisation focuses on developing technical data development skills. A true Centre of Excellence will always put equal impetus into creating excellent users and excellent developers.

DATA PLATFORMS / TOOLS

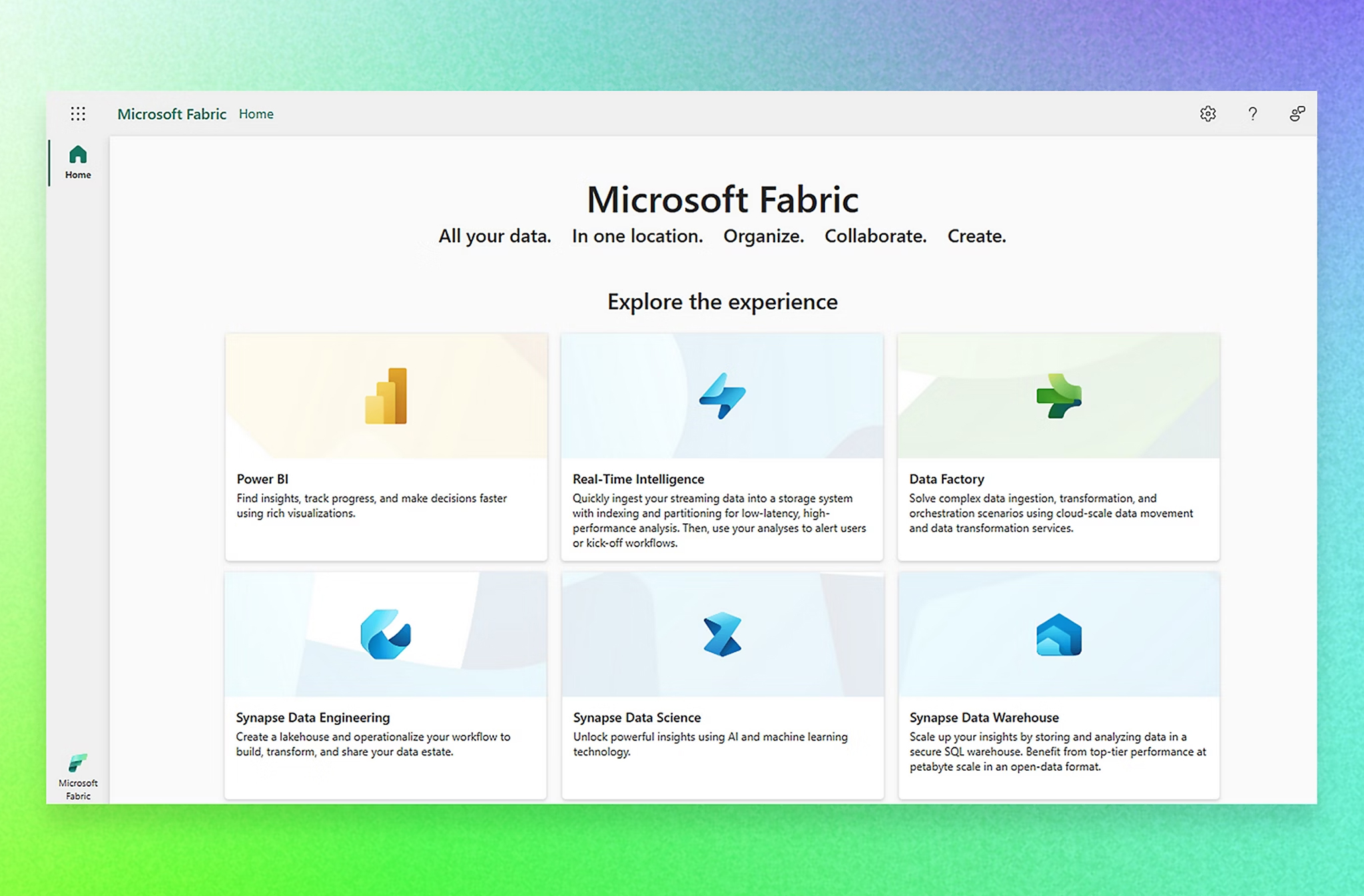

As with every other technology-enabled transformation, in data the technology is the easy bit. However, this is only true when the deployment of data tools is aligned with appropriate commitment to the three other foundations. Technology only does what we configure and programme it to do. Data platforms and tools don’t really fail; when they ‘don’t work’, it’s really a failure of the Centre of Excellence, Data Management or Compliance activity, or, most often, of not understanding the need: what do we need it to do?

The ready availability of Platform as a Service and Software as a Service data tools through Microsoft, Amazon, Google, Oracle et al. is a game changer for complex legacy organisations. Just about every data function and tool we will ever need is available in these major service environments. No longer do we have to build behemoth data centres to create data warehouses and platforms. We pay for what we use, when we use it, and we can scale up and scale back as frequently as we need to.

However, organisations are still getting it wrong with data platforms, rushing to deploy data lakes, cognitive services, ML tooling, IoT event hubs, MDM tools and so on without first establishing need, capability and capacity. None of these technologies is a panacea for undefined challenges or a route to realising vague opportunities.

These tools add incredible value when deployed in an architecture designed to meet a clear set of insight, data flow, automation and transactional objectives defined as actual work packages, and within an environment where deliberately identified data needs are matched by investment in the organisation’s capacity and capability to scale the foundations: an appropriate Centre of Excellence, Enterprise Data Management, and assured Secure and Compliant ways of working.

At Pivotl we will never design a data platform architecture or deploy a tool or service for a client without understanding the outputs it must enable, and the level of foundations required to support those outputs both in development and once live in the business. This is how the Data-First methodology underpins our thinking in every project or programme, no matter the complexity or scale. Applying the principles of deliberate and foundational thinking is the difference between success and failure.