Future-proofing your organisation with a strategic approach to data integration

As organisations increasingly rely on data to make decisions, formulate business strategies, and fuel AI initiatives, the architecture that supports this data becomes ever more important – and even more so when legacy systems figure prominently (don’t worry, I will explain why!).

Many executives still equate data expertise with building reporting tools and dashboards, focusing too much on front-end visualisation and not enough on the underlying architecture and infrastructure. That architecture is the spine of the organisation, because it enables effective, strategic data integration and data management capability.

Those organisations where legacy technology figures most prominently are often plagued by serious delivery issues and suffer general inertia – there is often a feeling of despair amongst staff because they feel stuck in legacy hell without viable options. I often face comments like: “We can’t move to the cloud because of system x”.

A data-first approach to digital transformation, built around data integration excellence, is essential for overcoming the constraints imposed by legacy systems. It provides a future-proof architectural foundation by creating different viable technology pathways, which enable future innovation and growth or simply offer the space and options to replace legacy or poorly performing systems at a sustainable pace.


The challenge of legacy

Legacy systems (e.g., outdated, monolithic systems still relied upon for critical business operations) present several key challenges, for example:

- Siloed data: Legacy systems frequently operate in isolation, making it difficult to access and analyse data across the organisation. This siloed approach limits collaboration and often hinders generating holistic business insights.

- High maintenance costs: Maintaining outdated technology can be costly, diverting resources away from innovation and modernisation needs. I have witnessed places being held to ransom because of this, and sadly it is an old consulting trick, which some of the bigger players have perfected over the years!

- Limited scalability: As businesses grow, legacy systems struggle to keep up, leading to performance bottlenecks and operational inefficiencies. Attempts to migrate to the cloud in like-for-like ways result in spiralling cloud costs.

- Integration difficulties: Legacy systems often lack the flexibility to integrate with new technologies, which can stifle innovation and slow the response to customer needs.

- Security issues: Legacy systems often rely on unsupported components with known security flaws that can be exploited. These flaws are often difficult to patch, if patching is possible at all.

- Poor usability: Through system constraints and often poorly designed user interfaces, users are forced to work in a particular way, which is exacerbated by the inability to redesign the front-end of applications due to their monolithic nature.

These challenges highlight the need for a robust data integration strategy that not only addresses current limitations but also lays the foundation for future growth. I like to consider the cumulative effect of these issues as a lack of ownership over your own data assets – not a great place for a CEO to be in from a risk perspective.

The role data integration can play in future-proofing architecture

Providing seamless data connectivity

Data integration services enable you to connect disparate data sources, both legacy and modern, creating a unified view of the organisation’s data landscape. This seamless connectivity helps overcome the siloed nature of legacy systems, ensuring that all data is accessible and actionable.
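As a rough illustration of what a unified view means in practice, the sketch below maps a legacy CSV export and a modern JSON payload onto one shared schema. The sample data, field names, and function names are all assumptions made for this example, not taken from any real system.

```python
import csv
import io
import json

# Hypothetical sample data: a CSV export from a legacy system and a JSON
# payload from a modern SaaS API. All field names are illustrative assumptions.
LEGACY_CSV = "CUST_ID,CUST_NAME\n001,Ada Lovelace\n002,Alan Turing\n"
MODERN_JSON = '[{"id": "003", "fullName": "Grace Hopper"}]'


def read_legacy(text: str) -> list[dict]:
    """Map legacy CSV columns onto the shared schema."""
    rows = csv.DictReader(io.StringIO(text))
    return [
        {"customer_id": r["CUST_ID"], "name": r["CUST_NAME"], "source": "legacy"}
        for r in rows
    ]


def read_modern(text: str) -> list[dict]:
    """Map modern JSON fields onto the same shared schema."""
    return [
        {"customer_id": r["id"], "name": r["fullName"], "source": "modern"}
        for r in json.loads(text)
    ]


def unified_view() -> list[dict]:
    """Combine both sources into one list with a consistent shape."""
    return read_legacy(LEGACY_CSV) + read_modern(MODERN_JSON)


if __name__ == "__main__":
    for record in unified_view():
        print(record)
```

Downstream reports and models then work against `customer_id` and `name` only, regardless of which system a record came from.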

Supporting a data fabric architecture

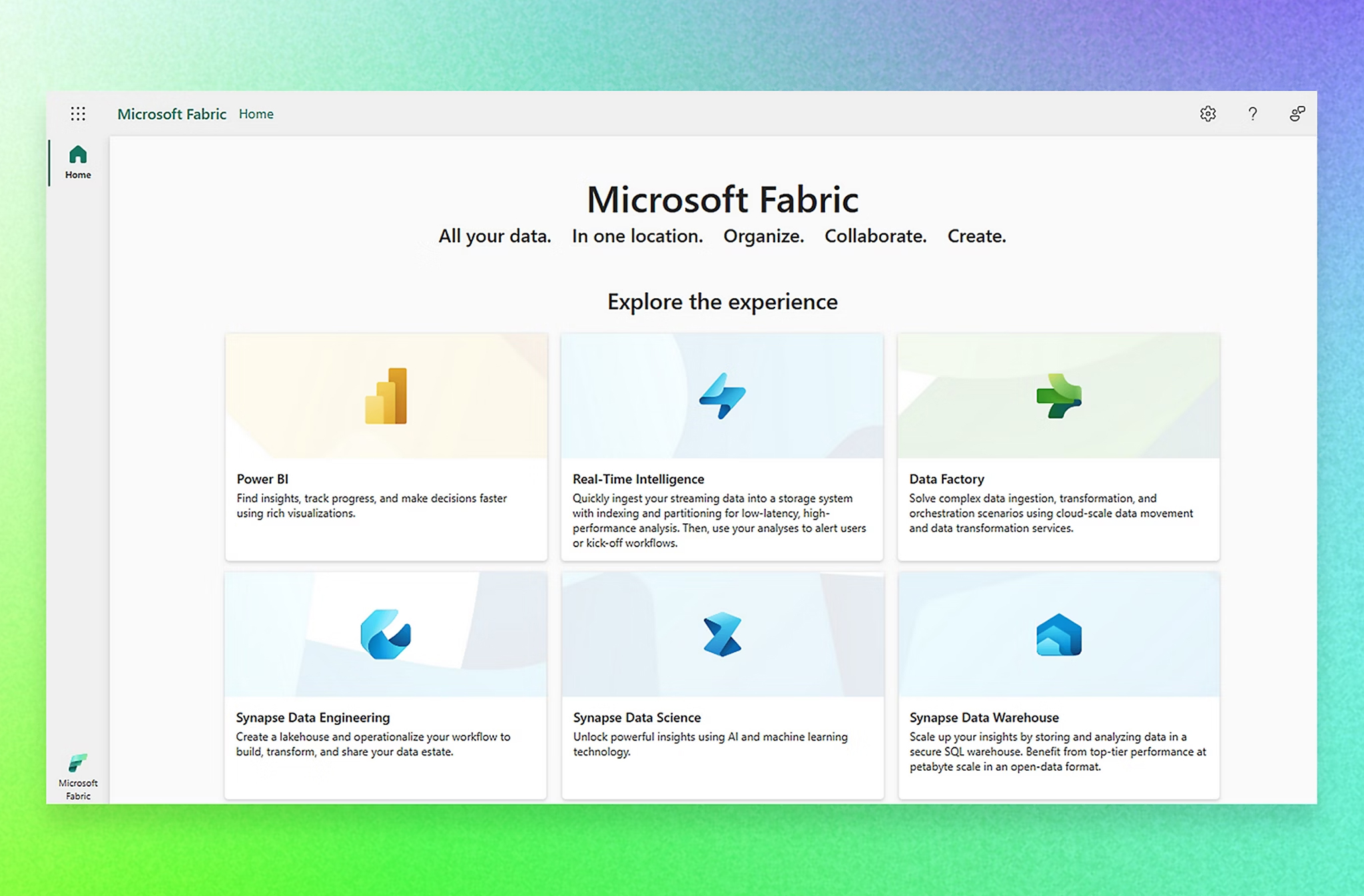

By implementing a data fabric (not to be confused with Microsoft’s Fabric service) – a cohesive architecture that integrates data across various environments – organisations can achieve real-time data access and insights. Data integration plays a crucial role in data fabric architecture, facilitating the flow of information between on-premises and cloud solutions, thus enhancing scalability and flexibility. This unified approach not only helps you mitigate the limitations of legacy systems but also supports flexible, agile architecture, which can be easily iterated on.

Enabling a data mesh approach

A data mesh architecture emphasises domain-oriented ownership and accountability, closely aligned to product-centric organisational models. With effective data integration, cross-functional teams can manage their own data products without being constrained by legacy systems. This decentralisation fosters innovation and responsiveness, as teams can quickly adapt data solutions to meet specific business needs without hard dependencies on centralised data teams.

Enhancing data governance and quality

Effective data integration helps organisations manage metadata and enforce data governance practices, leading to improved data quality and consistency. By providing clarity on data lineage and ownership, organisations can build trust in their data, essential for regulatory compliance and strategic decision-making. This transparency is particularly important when transitioning from legacy systems, as it ensures that data integrity is maintained throughout the process.
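To make lineage and ownership a little more concrete, here is a minimal sketch in which every transformation records where its data came from. The `Dataset` class, the `transform` helper, and the sample names are hypothetical; real platforms typically capture this metadata in a catalogue rather than in application code.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Dataset:
    """A dataset plus the metadata needed to answer 'where did this come from?'."""
    name: str
    owner: str
    rows: list[dict]
    lineage: list[str] = field(default_factory=list)


def transform(source: Dataset, name: str, step: str,
              fn: Callable[[dict], dict]) -> Dataset:
    """Apply fn to every row and record the step in the new dataset's lineage."""
    return Dataset(
        name=name,
        owner=source.owner,  # ownership carries over unless reassigned
        rows=[fn(row) for row in source.rows],
        lineage=source.lineage + [f"{source.name} -> {name}: {step}"],
    )


# Hypothetical example: cleaning an email field from a raw extract.
raw = Dataset(
    name="raw_students",
    owner="registry-team",
    rows=[{"email": " A.Student@Example.ac.uk "}],
)
clean = transform(
    raw,
    "clean_students",
    "trim and lowercase email",
    lambda r: {"email": r["email"].strip().lower()},
)
```

Anyone inspecting `clean.lineage` can see which dataset it was derived from and what was done to it, which is the kind of transparency that matters during a migration away from legacy systems.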

Future-proofed architecture

A flexible architecture, fuelled by data integration, is key to adapting to evolving business requirements. Data integration enables a modular approach, allowing organisations to introduce new technologies or data sources without disrupting existing operations (avoiding breaking changes). This flexibility is crucial for overcoming the limitations of legacy systems: it ensures that the architecture can evolve as the organisation scales and changes, and it makes it simpler to adopt new technologies or to identify and exploit new business opportunities. The adoption of industry standards and principles, such as those outlined in the Technology Code of Practice, supported by robust, automated engineering practices, is often synonymous with solid, adaptable architecture.
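One way to picture this modularity is a consumer that depends only on a small contract, so the system behind it can be swapped without a breaking change. The sketch below is illustrative only: the `StudentSource` protocol, both source classes, and the hard-coded data are assumptions made for the example.

```python
from typing import Iterable, Protocol


class StudentSource(Protocol):
    """The only contract downstream consumers depend on."""

    def fetch(self) -> Iterable[dict]: ...


class LegacyDatabaseSource:
    """Stand-in for an on-premises system (hypothetical, hard-coded data)."""

    def fetch(self) -> Iterable[dict]:
        return [{"student_id": "s1", "course": "Physics"}]


class CloudApiSource:
    """Stand-in for a replacement cloud service (hypothetical, hard-coded data)."""

    def fetch(self) -> Iterable[dict]:
        return [
            {"student_id": "s1", "course": "Physics"},
            {"student_id": "s2", "course": "History"},
        ]


def enrolment_count(source: StudentSource) -> int:
    """A consumer that never needs to know which system sits behind the source."""
    return sum(1 for _ in source.fetch())


if __name__ == "__main__":
    print(enrolment_count(LegacyDatabaseSource()))
    print(enrolment_count(CloudApiSource()))
```

Replacing the legacy source with the cloud one changes a single argument at the call site; `enrolment_count` itself is untouched.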

Lessons learned from our agile architecture work with a leading University

Introduction

We conducted a comprehensive enterprise architecture review, design, and roadmap for a leading University to tackle challenges in its IT landscape and explore sensible opportunities for modernisation – what they coined as an agile architecture. This work involved a short spike of rapid technology discovery: analysis, stakeholder engagement, and objective assessment against cloud and data best practices.

By using agile methodologies, our team and the client co-designed a future architecture state and created a high-level technology roadmap, centred around effective use of data (integration being key) and adopting Azure/365 by default, as a strategic imperative.

Key challenges

Key challenges cited by the client included:

- Hosting challenges: While the current hosting environment was well understood, optimal solutions and ways forward on the Azure/365 cloud environment were unclear to the organisation, as were costs and sequencing.

- Data distribution issues: Data replication occurred in a point-to-point, ad-hoc manner, complicating the integration landscape. This made insights difficult to generate and led to a reliance on spreadsheets.

- Underutilisation of tools: An enterprise architecture tool existed but was not effectively used, limiting access to vital information about the architecture landscape – leaving the organisation blind to potential risk.

Key observations

The in-depth review activity we completed highlighted several critical observations:

- Excessive infrastructure: Overprovisioning of on-prem infrastructure resource led to inefficiencies and unnecessary costs.

- Technical diversity: The range of platforms and applications – from different vendors/across different stacks – hampered effective management, with no clear technology preference in place.

- Application sprawl: The number of applications – including extensive shadow IT (i.e., not centrally endorsed or managed SaaS apps) – complicated governance.

- Security concerns: Existing security measures were inadequate, a particular concern given the size of the attack surface and the known unknowns.

- Lack of golden record: Data for students was held across multiple systems in different shapes and formats, with no centralised, high-quality data asset available for downstream analysis.

- Too many siloed data sources: Multiple on-prem data integration platforms were evident, including brittle point-to-point integrations, which carried maintenance overheads. There was a lack of support for SaaS integration patterns.
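The maintenance overhead of point-to-point integration can be illustrated with some simple arithmetic. The sketch below assumes the worst case, where every system exchanges data with every other system, and compares it with each system connecting once to a shared integration layer. The function names and the figure of ten systems are illustrative, not taken from the review.

```python
def point_to_point_links(systems: int) -> int:
    """Worst case: every pair of systems has its own integration."""
    return systems * (systems - 1) // 2


def hub_links(systems: int) -> int:
    """Each system connects once to a shared integration layer."""
    return systems


if __name__ == "__main__":
    n = 10  # illustrative number of systems
    print(f"{n} systems, point-to-point: {point_to_point_links(n)} links")
    print(f"{n} systems, shared layer:   {hub_links(n)} links")
```

For ten systems that is up to 45 pairwise integrations against 10 connections to a shared layer, and the gap widens as more systems are added.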

Future state vision

The client wanted to migrate from on-prem servers to a Microsoft 365/Azure centric cloud environment, focusing on security, scalability, and efficiency. The vision emphasised the need to deliver digital transformation efforts based on solid cloud and data foundations, with key goals including:

- De-coupling data from systems and applications.

- Unlocking real-time insights.

- Automating processes to enhance educational outcomes.

- Leveraging AI for future advancements.

What made this vision realistically deliverable was the future architecture state, which included Pivotl’s data platform accelerator – a pre-configured platform to centrally manage and integrate data across an organisation, designed and built using cloud-native Azure/Databricks technologies.

To guide this transition, we established several core architecture principles, including treating data as a strategic asset and decoupling data so that its origin, format, and hosting environment are irrelevant – freeing up the organisation to migrate services and data without breaking changes.

Key lessons learned

Be strategic about data integration: Data integration cannot be an afterthought. It must be approached strategically, as a platform, based on open standards and self-service integration models.

- Focus on data integration: Prioritise seamless integration across diverse data sources to eliminate silos, enhance accessibility, and de-couple your data assets from systems and applications – this provides options and opportunities.

- Establish a clear data governance framework: Define ownership, data quality standards, and security protocols to ensure data reliability and compliance.

- Prioritise a ‘golden record’ strategy: Create a single source of truth for critical data to eliminate discrepancies and improve decision-making.

- Adopt agile data practices: Implement agile methodologies and DataOps approaches in data management to quickly respond to changing needs without compromising stability and safety or experiencing breaking changes. Continuously evaluate integration processes to identify bottlenecks and areas for improvement.
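The ‘golden record’ idea in the list above can be sketched as a merge of the records that different systems hold for the same person. The example below uses one simple survivorship rule, where the most recently updated non-empty value wins. The sample records, field names, and rule are assumptions for illustration; real matching and survivorship logic is usually considerably richer.

```python
from datetime import date

# Hypothetical records for the same student held in three different systems.
RECORDS = [
    {"student_id": "s1", "email": "old@example.ac.uk", "phone": "",
     "updated": date(2022, 1, 10), "system": "admissions"},
    {"student_id": "s1", "email": "new@example.ac.uk", "phone": "",
     "updated": date(2024, 3, 5), "system": "vle"},
    {"student_id": "s1", "email": "", "phone": "01234 567890",
     "updated": date(2023, 6, 1), "system": "finance"},
]
FIELDS = ("email", "phone")


def golden_records(records: list[dict]) -> dict[str, dict]:
    """Build one merged record per student: newest non-empty value wins."""
    golden: dict[str, dict] = {}
    # Process oldest first so that newer non-empty values overwrite older ones.
    for rec in sorted(records, key=lambda r: r["updated"]):
        merged = golden.setdefault(
            rec["student_id"], {"student_id": rec["student_id"]}
        )
        for name in FIELDS:
            if rec[name]:  # skip empty values so they never erase good data
                merged[name] = rec[name]
    return golden


if __name__ == "__main__":
    print(golden_records(RECORDS)["s1"])
```

Here the merged record takes the email from the most recent system and the phone number from the only system that holds one.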

Key results

The architecture review and design enabled subsequent build work, aimed at catalysing significant, transformative change:

- Streamlining IT infrastructure: Transitioning to a cloud-first strategy reduces maintenance overhead and improves resource allocation.

- Enhancing data accessibility: A focus on data integration facilitates easier access to reliable data across departments, promoting informed decision-making.

- Strengthening security posture: A modern cloud environment enhances security measures and reduces vulnerability to breaches.

- Enabling digital transformation: The foundational architecture supports ongoing digital initiatives, allowing the exploration of AI and automation opportunities that enhance educational outcomes.

- Empowering staff and students: A more efficient technology ecosystem enables staff and students to achieve more with less, fostering innovation and adaptability.

Data integration presents a strategic advantage

The case study presented could serve as a valuable reference point for other organisations embarking on similar data-first digital transformation journeys. Data clearly has major benefits beyond the narrow confines of analytical reports and dashboards: it’s a strategic imperative and must be woven into the fabric of your organisation, including being embedded into digital transformation efforts from the outset.

In an era where data is the lifeblood of organisations, the success of any data integration initiative hinges on taking a platform-oriented, strategic approach. A data-first approach to enterprise architecture is essential for truly leveraging the power of data. By embracing modern integration practices, organisations can overcome the challenges of legacy technology, enhance their operational efficiency, and promote a culture of data-driven decision-making.

A strategic approach to data, such as this, should matter to CEOs as much as it does to technical leaders because of the profound impact it can have on an organisation remaining relevant, innovative, and future-proofed. Such technology matters can become existential: I have seen several cases of traditional middleware solutions throttling the delivery of major ERP upgrades or transformation programmes, which have literally halted business operations. As we’ve explained, there are now better ways of doing integration without dependencies on expensive middleware platforms and vendors.

Ultimately, the journey toward effective data integration is not just about generating data insights; it’s about taking control of and decoupling your data. Where data is hosted, on what platform, and in what format all become irrelevant because the integration layer provides an abstraction, freeing you to migrate services and data without breaking changes.
