Putting the rigour into data and AI engineering practices

In a time of AI-enabled development and unprecedented cyber-attacks, robust engineering practices matter more than ever.

Technology leaders, particularly CTOs and CIOs, come in different guises and focus on different areas. To generalise, some gravitate more towards the business or the politics, others towards the creation process or the code, with every shade in between.

Failed software systems, systems that are difficult to deliver or support, or, worse still, cyber security incidents are a direct consequence of not caring enough about engineering practices, a neglect that often stems from poor business ethics and management dysfunction.

We need look no further than the Horizon scandal to see the dire, life-changing consequences, but how does this apply to data or AI work? We’ve already had a glimpse: Snowflake has been linked to two major security incidents, involving Santander and Ticketmaster, in which confidential information was stolen. With Gen AI lowering the barriers to entry for becoming an engineer, are we asking for more trouble?

Through my own experience with data, I have found that shifting testing to the left (starting testing from the outset) and testing upstream data sources can help you detect problems early and uncover hidden issues in data sources, transformations, or ingestion logic. Approaches like Extreme Programming (XP) and Test-Driven Development (TDD) are more important than ever, despite being underappreciated compared with popular agile approaches such as Scrum, Kanban or, dare I say it, SAFe.

In this blog post, I explore why these practices matter for data and AI, and why they are a vital consideration in how you execute the data platform strategy I set out in the previous post in my Data Platform Strategy series.

Introducing XP values and practices

Coming from a cloud and software engineering background (I’ve done this for longer than I’d like to admit), I’m used to working with teams that take a ‘write tests before code’ approach as part of the development lifecycle, and it has always been non-negotiable for me, regardless of business pressure or management dysfunction.

XP is often synonymous with TDD, but it is about so much more than that in terms of its underlying philosophy. XP values communication, simplicity, feedback, courage, and respect.

Each value contributes to a cohesive, quality-focused development environment:

- Communication: Ensures a clear, concise, and consistent shared understanding among team members, who typically work in small, multidisciplinary teams.

- Simplicity: Encourages simple, straightforward solutions to complex problems.

- Feedback: Offers continuous insights into the development process to improve morale, cohesion, and productivity.

- Courage: Empowers engineers to make necessary changes without fear of blame.

- Respect: Promotes a collaborative, diverse, and supportive team environment.

In practice, teams that embrace XP typically adopt the practices most relevant to them, such as Continuous Integration (CI), Pair Programming, and Coding Standards, with TDD being one of the key practices.

A straightforward way to describe TDD is through its three steps, ‘Red, Green, and Refactor’:

- Red: Write a test that fails because the functionality does not exist yet (e.g., define the functional need).

- Green: Write the simplest code that passes the test (e.g., satisfy the functional need).

- Refactor: Clean up and improve the code without breaking functionality.
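As a minimal illustration of the cycle, the sketch below uses a hypothetical `normalise_postcode` cleaning function (not from any library). The test is written first and fails (Red), the simplest implementation makes it pass (Green), and the Refactor step then names a magic number without changing behaviour, so the same test must still pass.

```python
# Red: write the test first. It fails until normalise_postcode exists and
# produces the agreed format (upper case, one space before the inward code).
def test_normalise_postcode():
    assert normalise_postcode(" sw1a 1aa ") == "SW1A 1AA"
    assert normalise_postcode("sw1a1aa") == "SW1A 1AA"
    assert normalise_postcode("M1  1AE") == "M1 1AE"


# Refactor: the literal 3 from the first passing (Green) version is given a
# name, without changing behaviour; the test above must still pass.
INWARD_CODE_LENGTH = 3  # a UK postcode always ends in a 3-character inward code


# Green: the simplest code that makes the test pass.
def normalise_postcode(raw: str) -> str:
    compact = "".join(raw.split()).upper()  # drop all whitespace, upper-case
    outward = compact[:-INWARD_CODE_LENGTH]
    inward = compact[-INWARD_CODE_LENGTH:]
    return f"{outward} {inward}"


if __name__ == "__main__":
    test_normalise_postcode()
    print("test passed")
```

The same test file can be run by pytest, which discovers functions whose names start with `test_`.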

How XP-style approaches apply to data and AI

A TDD-based approach is not relevant to every aspect of data or AI projects. Parts of data science work, for example, are exploratory by nature, and there is little value in writing tests if you don’t know what the expected output is.

However, I think it is applicable in ways that might not be obvious at first. It is still underutilised in the data industry and, as in software, it will take time to become commonplace.

Here are some of the ways XP practices, such as TDD, can be applied to data and AI work:

Data engineering

TDD can help avoid breaking changes in data engineering work, a common issue in the data industry. It supports robust data pipelines in several ways:

- Data validation: Creating tests that validate data quality, ensuring accuracy and consistency as part of a data quality strategy.

- Pipeline reliability: Running automated tests can quickly find issues in data pipelines, reducing downtime and enhancing reliability.

- Code quality: By taking a test first approach, engineers are encouraged to write simple, maintainable code that is continuously improved.
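To make the pipeline reliability point concrete, here is a sketch of unit tests for a typical transformation step: keeping only the latest record per key. The function `latest_per_key` and the column names `id`, `updated_at` and `status` are hypothetical; the same shape of test works with pytest or with whichever test runner your platform provides.

```python
from datetime import datetime


def latest_per_key(rows, key="id", ts="updated_at"):
    """Keep only the most recent record for each key (a common dedup step).

    On a timestamp tie, the first record seen is kept.
    """
    latest = {}
    for row in rows:
        current = latest.get(row[key])
        if current is None or row[ts] > current[ts]:
            latest[row[key]] = row
    # Sort by key so the output order is deterministic and easy to assert on.
    return sorted(latest.values(), key=lambda r: r[key])


def test_keeps_newest_record_per_key():
    rows = [
        {"id": 1, "updated_at": datetime(2024, 1, 1), "status": "new"},
        {"id": 1, "updated_at": datetime(2024, 3, 1), "status": "closed"},
        {"id": 2, "updated_at": datetime(2024, 2, 1), "status": "open"},
    ]
    assert [r["status"] for r in latest_per_key(rows)] == ["closed", "open"]


def test_out_of_order_arrival():
    # Late-arriving older records must not overwrite newer ones.
    rows = [
        {"id": 1, "updated_at": datetime(2024, 3, 1), "status": "closed"},
        {"id": 1, "updated_at": datetime(2024, 1, 1), "status": "new"},
    ]
    assert latest_per_key(rows)[0]["status"] == "closed"


def test_empty_batch():
    assert latest_per_key([]) == []
```

The out-of-order test targets exactly the kind of subtle bug that tends to surface only in production if it is never written down as a test.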

Data science

While data science emphasises exploratory analysis, TDD can still offer significant advantages:

- Model validation: Checks that models perform as expected under different scenarios, promoting greater trust in data outcomes.

- Reproducibility: Tests the traceability and repeatability of model development, making experiments reproducible.

- Integration testing: Ensures that the components of a data science pipeline work seamlessly together.
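Reproducibility is one of the easier data science properties to test. The sketch below, using only the Python standard library, checks that a hypothetical `split_dataset` helper produces the same train/test split for the same seed and never loses or duplicates rows. The same idea could be applied to seeded model training or feature generation.

```python
import random


def split_dataset(rows, test_fraction=0.2, seed=42):
    """Shuffle a copy of rows with a seeded RNG, then split into train and test."""
    rng = random.Random(seed)  # isolated RNG, unaffected by global random state
    shuffled = list(rows)      # copy so the caller's data is not mutated
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    cut = len(shuffled) - n_test
    return shuffled[:cut], shuffled[cut:]


def test_same_seed_gives_same_split():
    rows = list(range(100))
    assert split_dataset(rows, seed=7) == split_dataset(rows, seed=7)


def test_split_keeps_every_row_exactly_once():
    rows = list(range(100))
    train, test = split_dataset(rows, test_fraction=0.2, seed=7)
    assert len(train) == 80 and len(test) == 20
    assert sorted(train + test) == rows


def test_input_is_not_mutated():
    rows = list(range(10))
    split_dataset(rows, seed=1)
    assert rows == list(range(10))
```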

Artificial intelligence

AI systems, especially those based on machine learning, can benefit from TDD:

- Model accuracy: Continuous testing supports model accuracy by detecting data drift and other issues early on.

- Scalability: As AI/ML models grow larger, robust testing practices help ensure they remain reliable and scalable (promoting production-ready solutions).

- Ethics: Tests can check that AI/ML models adhere to ethical guidelines and standards, helping to prevent unwanted, unexpected, or biased outcomes.
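Data drift checks can run as ordinary automated tests. One widely used measure is the Population Stability Index (PSI), which compares the binned distribution of a feature in new data against a baseline. The sketch below is a simplified, standard-library version under stated assumptions: equal-width bins taken from the baseline range, and a small floor on empty bins so the logarithm is always defined. The 0.1 threshold is a commonly quoted rule of thumb, not a universal standard, so agree thresholds for your own features.

```python
import math


def psi(baseline, current, bins=10):
    """Population Stability Index of `current` relative to `baseline`.

    Bins are equal-width over the baseline range; values outside that range
    are clamped into the first or last bin.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at a tiny share so log() below is always defined.
        return [max(c / len(values), 1e-6) for c in counts]

    expected, actual = proportions(baseline), proportions(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def test_no_significant_drift():
    baseline = [i / 100 for i in range(100)]
    current = [i / 100 for i in range(100)]
    # Rule-of-thumb threshold (assumption): PSI below 0.1 means little shift.
    assert psi(baseline, current) < 0.1
```

In a real pipeline, `baseline` would be a stored sample from training data and `current` a sample from the latest batch, so the test fails the run when a feature shifts materially.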

Integrating DevSecOps and Cyber practices

As data and AI systems become more popular and more important to businesses (and, as a result, attract more cyber-attacks), the need for robust security measures grows.

Note: an attack on a data platform or centralised data store could have more devastating consequences than an attack on data held across several independent systems, which is why this is an increasingly important concern.

DevSecOps integrates security practices into a typical DevOps/DataOps workflow, ensuring that security is a core component of the development process:

- Data protection: Ensures sensitive data is encrypted and access is controlled, protecting against breaches.

- Threat mitigation: Regular vulnerability assessments and threat modelling to help find and mitigate risks.

- Compliance: Helps satisfy stringent regulatory requirements, avoiding legal issues and supporting customer trust.
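Security checks can shift left in the same way as functional tests. As a minimal sketch, the code below pseudonymises an email column with a keyed hash (HMAC-SHA256 from Python’s standard library) and adds a test that fails if a raw email address reaches an analytics-bound dataset. Keyed pseudonymisation is a related control, not a substitute for encryption or access control. The column name, the regex, and the hard-coded demo key are illustrative assumptions; a real key would come from a secrets manager.

```python
import hashlib
import hmac
import re

# Deliberately loose pattern: good enough to catch obvious leaks in a test.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def pseudonymise(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 digest (hex string)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_analytics(rows, key: bytes, column="email"):
    """Return copies of rows with the sensitive column pseudonymised."""
    return [{**row, column: pseudonymise(row[column], key)} for row in rows]


def find_raw_emails(rows):
    """Return every string value that still looks like an email address."""
    return [
        value
        for row in rows
        for value in row.values()
        if isinstance(value, str) and EMAIL_PATTERN.search(value)
    ]


def test_no_raw_emails_leave_the_pipeline():
    demo_key = b"demo-only-key"  # assumption: load from a secrets manager in practice
    rows = [{"customer_id": 1, "email": "alice@example.com"}]
    assert find_raw_emails(prepare_for_analytics(rows, demo_key)) == []
```

Because the same input and key always give the same digest, pseudonymised columns can still be joined across datasets without exposing the underlying value.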

Note: the testing approaches described here focus on business or user requirements; however, non-functional requirements (NFRs), such as performance, monitoring, and data quality, also need to be considered as part of a holistic, left-shifted approach to testing.

Expanding on testing approaches, good practice, and anti-patterns

Maintaining reliable, accurate data pipelines is crucial in data projects. In software engineering, teams strive for close to full test coverage of code; however, this is not realistic in data engineering because of the complexity of data pipelines. You need to be much more focussed, specific, and selective in your testing for it to add real value.

In software engineering, an often-used testing approach is the testing pyramid. It covers the testing layers from unit testing through to manual testing, putting greater emphasis and effort on faster unit tests and less effort on slower manual testing.

This doesn’t map linearly to data work, because data work requires both code testing and data testing, and a much more selective approach to where you place effort (e.g., greater emphasis on data sources and their interdependencies).

In data work, most of the testing effort should go on data integration and validation tests (flow, source, and contract tests, for example). These areas involve dependent data sources and carry the highest risk: data can go missing during migration, mappings can be wrong, or data integrity can be compromised. Focusing here also helps avoid breaking changes when a source system changes its schema.

Note: other tests are still important and applicable, but it is about risk-based prioritisation and return on effort.
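As an example of the flow tests mentioned above, the sketch below reconciles a source extract against the loaded target: it reports missing or unexpected keys, duplicate keys, and values that were not mapped as agreed. The function `reconcile`, its parameters, and the status codes are hypothetical; in practice the rows would come from queries against the source and target stores.

```python
def reconcile(source_rows, target_rows, key, field, mapping):
    """Compare source and target after a load and return a list of problems.

    `mapping` is the agreed translation of `field` from source codes to
    target values (e.g. {"A": "Active"}).
    """
    problems = []
    source = {row[key]: row for row in source_rows}
    target = {row[key]: row for row in target_rows}

    if len(target) != len(target_rows):
        problems.append("duplicate keys in target")
    missing = sorted(source.keys() - target.keys())
    if missing:
        problems.append(f"missing in target: {missing}")
    unexpected = sorted(target.keys() - source.keys())
    if unexpected:
        problems.append(f"unexpected in target: {unexpected}")

    # For records present on both sides, check the mapping was applied correctly.
    for k in sorted(source.keys() & target.keys()):
        expected = mapping.get(source[k][field])
        actual = target[k][field]
        if actual != expected:
            problems.append(f"{key}={k}: expected {field}={expected!r}, got {actual!r}")
    return problems


def test_load_is_complete_and_correctly_mapped():
    status_map = {"A": "Active", "I": "Inactive"}
    source = [{"id": 1, "status": "A"}, {"id": 2, "status": "I"}]
    target = [{"id": 1, "status": "Active"}, {"id": 2, "status": "Inactive"}]
    assert reconcile(source, target, "id", "status", status_map) == []
```

Returning a list of problems, rather than stopping at the first, gives a fuller picture of what went wrong in a single run.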

Testing core data architectural layers

Higher-risk or critical areas should be identified and prioritised according to business priorities, risk assessments, and domain knowledge. Engineers can then focus their efforts on ensuring the most critical aspects of the data pipeline are thoroughly tested.

To explain the distinct types of testing, we can think of a data platform, in simple terms, as being decomposed into three core architectural layers:

- Source: Receiving, validating, and storing data from various sources, such as data files, APIs, or other mechanisms.

- Integration: Processing, validating, and integrating different datasets.

- Functional: Presenting data to business intelligence systems and dashboards.

Data quality starts and ends with data ingestion from source systems. I’ve seen many broken data pipelines and systems caused by a change to an interface that something else depended on.

The key to effective integration testing is to define interface contracts or data contracts (data contracts deserve a dedicated blog post) early on, in collaboration with other teams.

Development should not start without a clear contract in place that sets out expectations for data structure, quality, and business rules.
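A data contract can start life as a shared, versioned definition that both the producing and consuming teams test against. The sketch below shows one hypothetical way to express a contract in plain Python (`FieldSpec`, `CUSTOMER_CONTRACT` and `check_contract` are illustrative names, not a standard): each field declares its type, whether it is required, and an optional business rule. Dedicated formats and tools exist for this; the point here is only the shape of the check.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class FieldSpec:
    type_: type
    required: bool = True
    rule: Optional[Callable[[object], bool]] = None  # business rule, if any


# Hypothetical contract agreed between a producing team and a consuming team.
CUSTOMER_CONTRACT = {
    "customer_id": FieldSpec(int, rule=lambda v: v > 0),
    "email": FieldSpec(str),
    "country": FieldSpec(str, required=False, rule=lambda v: len(v) == 2),
}


def check_contract(record: dict, contract: dict) -> list:
    """Return a list of contract violations for one record (empty if valid)."""
    errors = []
    for name, spec in contract.items():
        value = record.get(name)
        if value is None:
            if spec.required:
                errors.append(f"{name}: missing")
            continue
        if not isinstance(value, spec.type_):
            errors.append(
                f"{name}: expected {spec.type_.__name__}, got {type(value).__name__}"
            )
        elif spec.rule is not None and not spec.rule(value):
            errors.append(f"{name}: failed business rule")
    # Fields the contract does not mention are flagged rather than passed on silently.
    for name in sorted(set(record) - set(contract)):
        errors.append(f"{name}: not in contract")
    return errors
```

Running `check_contract` in both the producer’s and the consumer’s test suites means a breaking change is caught on whichever side makes it first.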

Data integration is critical to the success of an organisation: it enables interoperability and actionable insights, and it highlights business risk. The integration layer is where data is processed, transformed, and combined.

Effective integration testing is about writing tests that check ingested data matches the expected formats and conforms to defined schemas. This helps to find inconsistencies or issues that could affect downstream analytics or applications. Tests should be triggered when a pipeline changes or when an underlying schema changes, for example.
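One way to trigger tests when an underlying schema changes is to compare the schema a source currently exposes with the one the pipeline expects, and fail the build on breaking differences. In the sketch below, schemas are plain column-to-type dictionaries (in practice they might be read from the source’s catalogue), and treating added columns as non-breaking while removed or retyped columns are breaking is an assumed policy; your contract may be stricter.

```python
def diff_schema(expected: dict, observed: dict) -> dict:
    """Compare two {column: type} schemas and list the differences."""
    shared = expected.keys() & observed.keys()
    return {
        "added": sorted(observed.keys() - expected.keys()),
        "removed": sorted(expected.keys() - observed.keys()),
        "retyped": sorted(c for c in shared if expected[c] != observed[c]),
    }


def is_breaking(diff: dict) -> bool:
    # Assumed policy: new columns are tolerated; removals and type changes are not.
    return bool(diff["removed"] or diff["retyped"])


def test_source_schema_has_no_breaking_changes():
    expected = {"id": "bigint", "name": "string", "created": "timestamp"}
    observed = {"id": "bigint", "name": "string", "created": "timestamp", "region": "string"}
    diff = diff_schema(expected, observed)
    assert diff["added"] == ["region"]
    assert not is_breaking(diff)
```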

The integration layer can become large and complex, so tests should be based on predefined business logic rather than user experience alone or gut feel. Integration logic and presentational logic should also be kept separate, as mixing these two concerns creates unnecessary complexity.

The last stage of the lifecycle is the presentational layer, where data is presented to users in reports or dashboards. Functional tests are used to verify the quality of the delivered output.

Functional tests should be defined upfront with end users and used to capture their expectations in terms of data quality, acceptable thresholds, and expected behaviour.

Functional testing loses value when tests become too technically focused and ignore user needs and functionality, or when they become too generic (e.g., broadly trying to check that everything works).
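To keep functional tests tied to user needs rather than generic checks, thresholds can be agreed with end users and encoded directly. The sketch below assumes two hypothetical acceptance criteria for a revenue dashboard: the reported total must match the underlying transactions within an agreed tolerance, and the region field must be at least 98% complete. The figures, field names, and function names are illustrative only.

```python
# Hypothetical acceptance criteria agreed upfront with dashboard users.
ACCEPTANCE = {"revenue_tolerance": 0.01, "min_region_completeness": 0.98}


def completeness(rows, column):
    """Share of rows in which `column` is populated."""
    if not rows:
        return 0.0
    return sum(1 for r in rows if r.get(column) not in (None, "")) / len(rows)


def check_revenue_dashboard(reported_total, transactions, criteria=ACCEPTANCE):
    """Return failures against the agreed criteria (an empty list means pass)."""
    failures = []
    source_total = sum(t["amount"] for t in transactions)
    if abs(reported_total - source_total) > criteria["revenue_tolerance"]:
        failures.append(
            f"reported revenue {reported_total} differs from source {source_total}"
        )
    region_share = completeness(transactions, "region")
    if region_share < criteria["min_region_completeness"]:
        failures.append(f"region completeness {region_share:.0%} below target")
    return failures
```

Each failure message speaks in terms the dashboard’s users agreed to, which keeps the test focused on what they actually need.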

A case study of where it can pay dividends

We worked with a government department that was struggling with data integration and data quality across several critical systems. Its source systems had inconsistent and frequently changing data schemas, which the existing middleware was not handling effectively, resulting in many manual workarounds and a lack of trust in the data.

We designed and built a new integration layer, taking a platform-oriented approach underpinned by robust engineering practices. We applied contract, flow, and integration tests within automated data pipelines, which gave us visibility of source system data issues, helped us resolve them, and kept the system stable, giving confidence during the transition to live. Without this substantive, left-shifted approach to testing, it would have been impossible to deliver the solution safely.

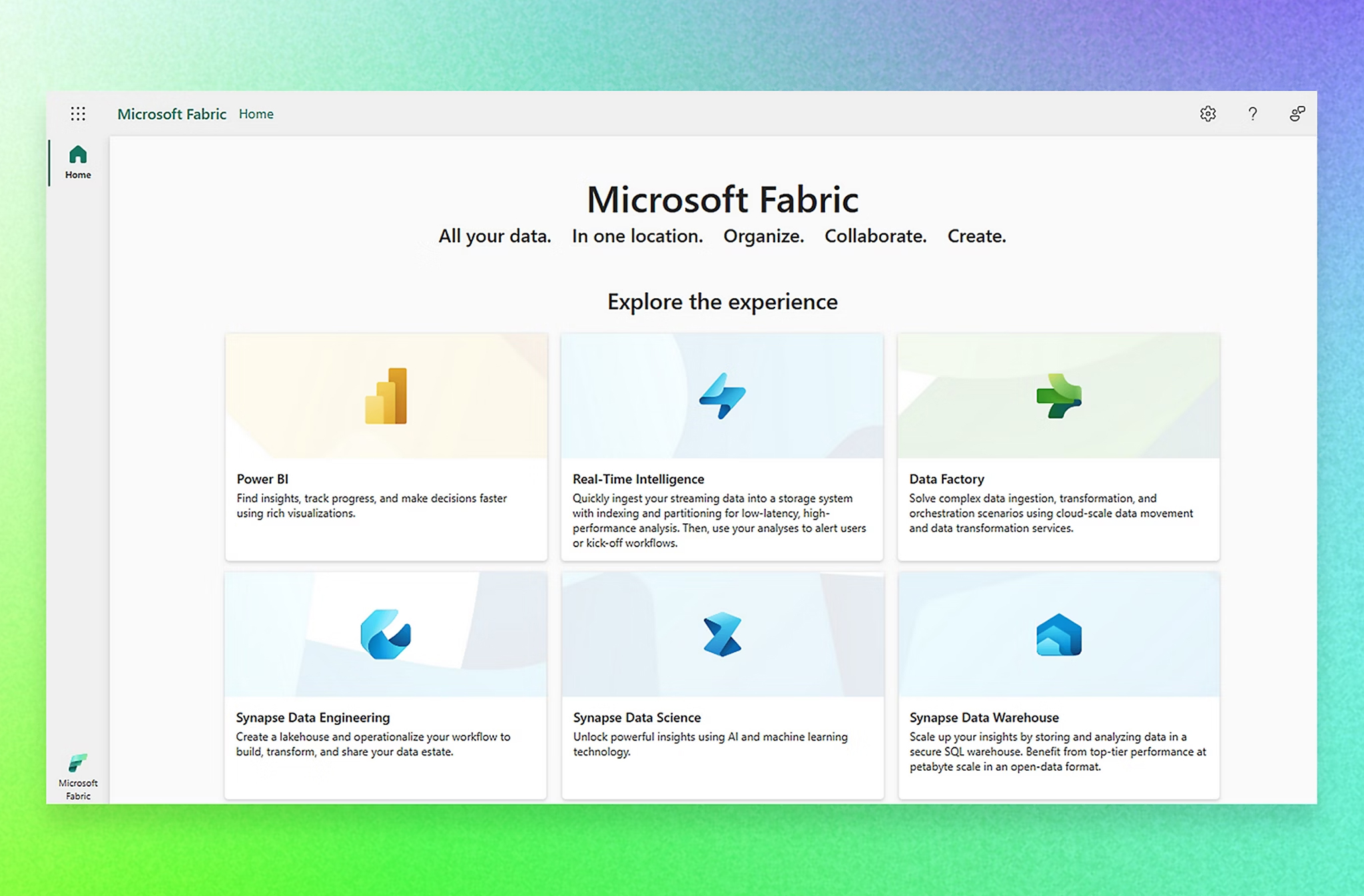

Testing practices with data platforms

In my last blog post, I introduced data platforms. One of their benefits, when adopting robust engineering practices, is standardisation and consistency.

Platforms like Azure, Databricks, and Snowflake each offer standardised approaches and integrated tools for writing unit tests for notebooks or data pipelines, which is inherently more efficient than multiple teams doing it in different ways with different tools.

In some cases, specialist data testing tools can be useful. DBT is one such tool; it is used for data testing and is often supported natively in popular data platforms.

Closing thoughts

XP-style engineering practices can, do, and should apply to the rapidly evolving data and AI landscape as part of an overarching approach to quality and security. They enhance quality, reliability, and maintainability, fostering a robust, high-quality development environment. Integrating DevSecOps and cybersecurity practices further strengthens these systems, helping to ensure they are secure and compliant.

By embracing XP-style testing practices and prioritising security, organisations can build robust, scalable, and user-centric data and AI solutions, driving innovation and keeping a competitive edge without introducing unnecessary, irresponsible business risk.

To sum up, these are the characteristics of an effective approach to testing data and AI solutions:

- Pipeline tests: Data quality testing should be baked into data pipelines, integrated in a way that’s seamless and accessible.

- Shifted left: Data should be tested in its raw format, when it’s transformed, and throughout its lifecycle.

- Collaborative: Data experts should proactively test data to build trust between data teams, stakeholders, and users.

Implementing robust engineering practices results in:

- Increased quality: TDD ensures high-quality code by finding errors early, reducing the cost of fixing errors and future support costs.

- Faster delivery timescales: Iterative development enables quicker releases, allowing businesses to respond promptly to rapidly changing market needs.

- Better collaboration: Cross-functional teams work better together, aligning technical solutions with business goals.

- Greater flexibility: The adaptability of XP practices allows for better handling of changing requirements, crucial in data and AI projects.

- Reduced risk: Continuous integration and testing reduce the risk of major failures, protecting the business from costly downtime or major incidents.

Of course, not every business that neglects good engineering practices or testing approaches will be hacked, but good engineering goes hand in hand with an effective cyber security posture.