Why a Data Fabric May Be the Key to Scaling Your Enterprise AI

Despite significant investments in AI initiatives, many enterprises fail to scale AI beyond isolated pilot projects. A recent BCG study found that 74% of companies are struggling to scale AI adoption and achieve value. The root cause of this delivery challenge frequently lies not in the AI models themselves, but in the underlying data infrastructure that supports them. Organizations face a wide range of data-related obstacles that combine to impede AI adoption at scale.

In recent weeks I’ve had the chance to discuss many of these issues with my friends at Pivotl as they work with a variety of clients to define and implement data infrastructure to manage their complex service delivery needs. I’ve learned a great deal from those interactions. Now, I have also had the opportunity to look at the data virtualization solution provided by Denodo and hear first-hand from their customers using the Denodo platform. It’s been quite revealing.

So, based on these experiences, what have I been learning about the difficulties enterprises face as they seek to deliver AI-at-Scale? How do approaches based on defining a data fabric across that complex infrastructure help overcome these data management concerns?

To answer these questions there is much to unpick regarding the relationship between business strategy and data management infrastructure, the need for a clear mission focus when investing in technology, and the importance of enhancing digital technology skills in executive managers. However, a foundation for all of these discussions is to start with a clear perspective on the depth of the data challenges faced by most organizations today, and to outline an organizational model that will allow us to define, assess, and mature our approach to data management.

The Data Challenge: The Hidden Barrier to AI at Scale

There is no doubt that scaled adoption of AI brings significant data issues. Foremost among these challenges is data fragmentation. Enterprise data typically exists in disparate silos, created by different business units using incompatible systems across multiple time periods. This fragmentation results in inconsistent data models, conflicting taxonomies, and divergent business rules. When AI initiatives attempt to leverage this fragmented data landscape, they encounter significant barriers to integration that slow development and compromise results.

Data quality issues compound these challenges. Machine learning models require clean, consistent, and well-structured data to produce reliable outcomes. However, many organizations lack robust data quality management processes, resulting in incomplete records, inconsistent formats, and erroneous values. These quality deficiencies propagate through AI systems, diminishing model performance and undermining stakeholder confidence in AI-driven insights.

Governance deficiencies further constrain AI scalability. Inadequate data lineage tracking makes it difficult to understand data provenance and transformations, while poorly defined data ownership creates uncertainty about who can authorize data usage for specific AI applications. Regulatory compliance concerns regarding data privacy and ethical AI usage introduce additional complexity, particularly for organizations operating across multiple jurisdictions with varying regulatory requirements.

The consequences of these data management shortcomings are substantial. AI initiatives typically devote considerable resources to data preparation (so-called “data cleaning”) rather than to model development. This inefficiency not only increases costs but also extends time-to-value, diminishing the competitive advantage that AI might otherwise provide. Additionally, the resulting models are often quite limited in how they can be applied, functioning adequately within narrow use cases but failing to deliver value across broader enterprise contexts.

Data Fabric: A Unified Approach to Enterprise Data Management

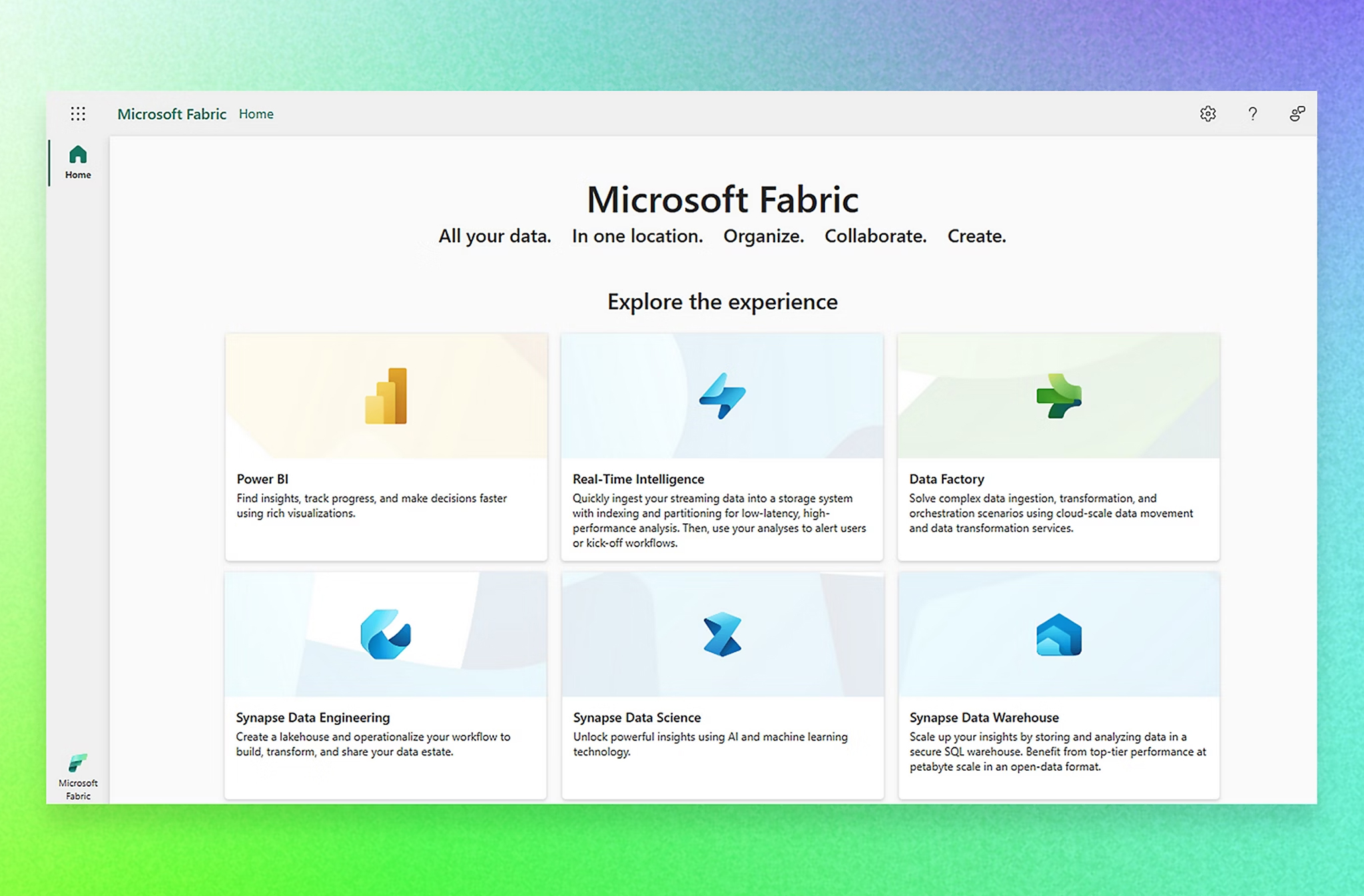

The data fabric architecture represents a strategic response to these challenges. It is an organizational model that offers a unified, integrated approach to data management to help overcome traditional data infrastructure limitations. At its core, a data fabric creates a coherent data ecosystem that spans disparate sources, formats, and locations while maintaining consistent governance, security, and accessibility.

Unlike conventional data warehouses or lakes that physically consolidate data in centralized repositories, a data fabric employs a more flexible approach. It creates a semantic layer that overlays existing data sources, establishing standardized data models, metadata, and access methods without necessitating comprehensive data migration. This approach acknowledges the distributed nature of enterprise data while providing unified logical access.
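To make the idea of a semantic layer more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how any particular product (Denodo included) implements it. The two sources, their field names, and the `SourceBinding` and `LogicalView` classes are all hypothetical. The point is simply that each source stays where it is, and a mapping presents its records under one shared set of field names at read time.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, Iterator, List

Record = Dict[str, object]


@dataclass
class SourceBinding:
    """Maps one physical source onto the shared (canonical) field names."""
    name: str
    fetch: Callable[[], Iterable[Record]]  # reads from the source in place
    field_map: Dict[str, str]              # canonical field -> source field


class LogicalView:
    """A unified, read-only view over several sources; nothing is migrated."""

    def __init__(self, bindings: List[SourceBinding]) -> None:
        self.bindings = bindings

    def rows(self) -> Iterator[Record]:
        for binding in self.bindings:
            for raw in binding.fetch():
                row: Record = {
                    canonical: raw.get(source_field)
                    for canonical, source_field in binding.field_map.items()
                }
                row["_source"] = binding.name  # keep provenance on every row
                yield row


# Two hypothetical sources with incompatible field names.
def fetch_crm() -> List[Record]:
    return [{"cust_id": 1, "full_name": "Ada Lovelace"}]


def fetch_billing() -> List[Record]:
    return [{"customer_no": 2, "name": "Alan Turing"}]


customers = LogicalView([
    SourceBinding("crm", fetch_crm,
                  {"customer_id": "cust_id", "customer_name": "full_name"}),
    SourceBinding("billing", fetch_billing,
                  {"customer_id": "customer_no", "customer_name": "name"}),
])

for row in customers.rows():
    print(row)
```

A consumer of `customers.rows()` sees `customer_id` and `customer_name` regardless of which system a record came from. A real data fabric would add query pushdown, security, and metadata on top of this basic mapping idea.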

Modern data fabric implementations leverage advanced technologies to deliver their capabilities. Knowledge graphs create meaningful semantic relationships between data entities across sources. Intelligent metadata management automatically discovers, catalogs, and classifies data elements. Machine learning algorithms continuously analyze data usage patterns to optimize data delivery and access methods. API-driven integration enables consistent data services across diverse applications and platforms.

The architecture offers several distinctive advantages over traditional data management approaches. It provides context-aware data access, delivering information tailored to specific business needs and user roles. It enables real-time data integration, combining historical data with current transactions to support timely decision-making. Perhaps most importantly, it creates a self-service environment where business users and data scientists can discover, understand, and utilize enterprise data without extensive technical assistance.

Data Fabric as the Foundation for Enterprise AI

The architectural characteristics of data fabrics align well with the requirements for scaling AI across the enterprise. In practice, this alignment manifests in five critical dimensions that collectively enable more effective AI deployment.

First, data fabrics dramatically reduce the data preparation burden that typically consumes significant AI project resources. By providing consistent, curated data access across disparate sources, they eliminate redundant extraction and transformation processes. This efficiency redirects resources toward model development and refinement rather than data wrangling, accelerating time-to-value for AI initiatives.

Second, data fabrics enhance model quality by improving data consistency and completeness. The unified semantic layer normalizes data representations across sources, ensuring that AI models receive standardized inputs regardless of the underlying data origin. This consistency translates directly into more accurate model predictions and more reliable AI-driven insights.

Third, data fabrics facilitate responsible AI through comprehensive governance and lineage tracking. They maintain detailed information about data provenance, transformations, and usage authorizations, creating transparency that supports regulatory compliance and ethical AI practices. This governance foundation is increasingly essential as organizations navigate complex regulatory requirements surrounding AI deployment.
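As a rough illustration of what lineage tracking involves, the sketch below records, for each dataset, which inputs it was derived from, how, and for which uses it has been authorized. The class names, dataset names, and use labels are hypothetical, and a production catalog would hold far richer metadata. It does show the two questions governance teams tend to ask: where did this data come from, and is this use approved?

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class LineageRecord:
    dataset: str
    inputs: List[str] = field(default_factory=list)   # direct upstream datasets
    transformation: str = ""                          # how the dataset was derived
    approved_uses: Set[str] = field(default_factory=set)


class LineageCatalog:
    def __init__(self) -> None:
        self._records: Dict[str, LineageRecord] = {}

    def register(self, record: LineageRecord) -> None:
        self._records[record.dataset] = record

    def upstream(self, dataset: str) -> Set[str]:
        """Return every dataset that feeds, directly or indirectly, into `dataset`."""
        seen: Set[str] = set()
        stack = [dataset]
        while stack:
            current = stack.pop()
            record = self._records.get(current)
            if record is None:
                continue  # unknown dataset: nothing recorded upstream of it
            for parent in record.inputs:
                if parent not in seen:
                    seen.add(parent)
                    stack.append(parent)
        return seen

    def is_approved(self, dataset: str, use: str) -> bool:
        record = self._records.get(dataset)
        return record is not None and use in record.approved_uses


catalog = LineageCatalog()
catalog.register(LineageRecord("crm_raw"))
catalog.register(LineageRecord("billing_raw"))
catalog.register(LineageRecord("customer_360", ["crm_raw", "billing_raw"],
                               "join on customer_id", {"churn_model"}))
catalog.register(LineageRecord("churn_features", ["customer_360"],
                               "aggregate by month", {"churn_model"}))

print(sorted(catalog.upstream("churn_features")))
print(catalog.is_approved("churn_features", "marketing_email"))
```

Here the features used by a hypothetical churn model can be traced back to both raw sources, and a request to reuse them for an unapproved purpose is rejected.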

Fourth, data fabrics enable AI models to access a broader range of enterprise data, expanding their potential applications. Rather than functioning in isolated data domains, AI systems can leverage comprehensive enterprise information, identifying patterns and relationships that would remain invisible in more limited data contexts. This expanded scope creates opportunities for novel AI applications that deliver significant business value.

Finally, data fabrics support the continuous learning and adaptation essential for sustainable AI advantage. By monitoring data patterns and usage, they can automatically detect changes in underlying data characteristics that might affect model performance. This capability enables proactive model retraining and adaptation, maintaining AI effectiveness as business conditions evolve.
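One simple way to picture this kind of monitoring is a check that compares recent values of a field against a reference sample taken when the model was trained. The sketch below uses a deliberately basic measure (how far the mean has moved, in reference standard deviations) as a stand-in for whatever a given platform actually does. The function names, the sample values, and the threshold of 2.0 are assumptions chosen for illustration.

```python
from statistics import mean, stdev
from typing import Sequence


def mean_shift_score(reference: Sequence[float], current: Sequence[float]) -> float:
    """How far the current mean has moved, in reference standard deviations.

    `reference` needs at least two values so a standard deviation exists.
    """
    spread = stdev(reference)
    if spread == 0:
        # A constant reference: any change at all counts as an unbounded shift.
        return 0.0 if mean(current) == mean(reference) else float("inf")
    return abs(mean(current) - mean(reference)) / spread


def needs_retraining(reference: Sequence[float],
                     current: Sequence[float],
                     threshold: float = 2.0) -> bool:
    """Flag the model for review when the shift exceeds the chosen threshold."""
    return mean_shift_score(reference, current) > threshold


# Hypothetical order values seen at training time and in two later periods.
reference = [100.0, 102.0, 98.0, 101.0, 99.0]
stable = [101.0, 99.0, 100.0, 102.0, 100.0]
shifted = [120.0, 118.0, 121.0, 119.0, 122.0]

print(needs_retraining(reference, stable))
print(needs_retraining(reference, shifted))
```

The stable period is left alone and the shifted one is flagged. In practice teams often use richer statistics and look at many fields at once, but the principle is the same: the data layer notices the change before model accuracy quietly degrades.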

Balancing Benefits and Challenges of Data Fabric Implementation

While data fabrics offer many advantages for AI initiatives, organizations must carefully consider the associated challenges and costs before implementation. The introduction of an additional semantic layer between data sources and AI applications creates potential performance implications that require thoughtful architectural planning. Data access through fabric intermediaries may introduce latency compared to direct source access, particularly for high-volume, real-time applications. Organizations must carefully evaluate these performance considerations against business requirements, taking advantage of appropriate caching mechanisms or optimized query paths for time-sensitive AI workloads.
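The caching trade-off can be sketched in a few lines. The example below wraps a slow query in a small time-to-live (TTL) cache so that repeated requests within a window are served from memory rather than going back through the fabric to the source. The `TTLCache` class, the 30-second window, and the `slow_query` function are hypothetical. The clock is injected only so the behaviour can be demonstrated without waiting.

```python
import time
from typing import Any, Callable, Dict, Hashable, List, Tuple


class TTLCache:
    """Serve a stored result until it is older than `ttl_seconds`."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self._clock = clock
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}

    def get_or_fetch(self, key: Hashable, fetch: Callable[[], Any]) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self.ttl_seconds:
            return entry[1]                 # fresh enough: skip the source
        value = fetch()                     # missing or stale: go to the source
        self._entries[key] = (now, value)
        return value


calls: List[int] = []


def slow_query() -> List[dict]:
    """Stand-in for an expensive request routed through the semantic layer."""
    calls.append(1)
    return [{"customer_id": 1}]


fake_now = [0.0]  # a controllable clock for the demonstration
cache = TTLCache(ttl_seconds=30, clock=lambda: fake_now[0])

cache.get_or_fetch("customers", slow_query)  # miss: queries the source
cache.get_or_fetch("customers", slow_query)  # hit: served from the cache
fake_now[0] = 31.0
cache.get_or_fetch("customers", slow_query)  # expired: queries the source again

print(len(calls))
```

Three requests result in only two trips to the source. The design question for any real workload is how stale an answer the AI application can tolerate, which is what the TTL value encodes.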

Furthermore, data fabric implementations create dependencies on the intermediate semantic layer that must be properly managed. This dependency introduces a potential single point of failure that requires robust resilience measures, including redundant components and failover mechanisms. Organizations must also assess the skills required to maintain and evolve this semantic layer, recognizing that data fabric expertise combines traditional data management capabilities with more specialized knowledge of semantic modelling, metadata management, and distributed data integration. This expertise gap often requires significant investment in training or external resources during initial implementation phases.

Finally, cost considerations merit careful attention when evaluating data fabric implementations. While data fabrics can reduce long-term costs by eliminating redundant data integration efforts, the initial implementation may demand substantial investment in technology, processes, and organizational alignment. Organizations must develop comprehensive business cases that account for both immediate implementation costs and longer-term operational implications, including infrastructure requirements, licensing fees, and specialized personnel. These financial considerations should be evaluated against expected benefits, with clear metrics established to monitor return on investment as the data fabric matures.

Taking a Data Fabric Approach

While the benefits of a data fabric architecture are clear, implementation requires thoughtful planning and execution. Digital leaders starting this journey can follow three concrete steps (Examine, Evaluate, and Establish):

- Examine: Conduct a data ecosystem assessment. Begin by mapping your existing data landscape, identifying critical data sources, current integration mechanisms, and governance practices. Document pain points in current data access and utilization, particularly focusing on obstacles encountered during previous AI initiatives. This assessment creates visibility into the specific challenges that a data fabric must address within your organizational context.

- Evaluate: Develop a minimum viable data fabric. Rather than attempting comprehensive implementation immediately, identify a specific high-value business domain where improved data integration would deliver significant benefits. Focus on primary data sources and implement core data fabric capabilities (semantic modelling, metadata management, and API-based access) within this limited scope. Use this focused implementation to demonstrate value, refine approaches, and build organizational support before expanding to broader enterprise coverage.

- Establish: Define cross-functional governance mechanisms. Data fabric implementation goes beyond traditional technology boundaries, requiring collaboration across business, data, and technology functions. Create governance structures that bring these perspectives together, clearly defining data ownership, quality standards, and usage policies. These governance mechanisms should balance centralized oversight with distributed execution, empowering local teams while maintaining enterprise coherence.

By taking these steps, digital leaders can begin transforming their data infrastructure to support enterprise-wide AI initiatives. A well-implemented data fabric can address many of the data barriers that have historically constrained AI scaling, creating the foundation for AI-at-Scale and delivering pervasive, high-value AI applications across the organization.